Radiology has been at the forefront of AI adoption in clinical practice. Startups in this space often promise rapid image interpretation, early disease detection, workflow optimization, and even diagnostic support. While these innovations are promising, it is critical for clinicians to understand both what these tools offer and what they do not.

This case study aims to equip clinicians with a framework for understanding, evaluating, and engaging with radiology AI solutions.

What Radiology AI Startups Typically Offer

- Automated Image Analysis
  - Detection of findings (e.g., lung nodules, fractures, hemorrhages)
  - Quantitative measurements (e.g., lesion size, volume, density)
  - Triage alerts for critical findings (e.g., stroke, pneumothorax)

- Workflow Integration
  - PACS/RIS integration
  - Prioritization of cases
  - Reduction in reporting times

- Decision Support
  - Diagnostic suggestions based on pattern recognition
  - Comparison with prior imaging
  - Structured reporting assistance
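To make "triage alerts" and "prioritization of cases" concrete, here is a minimal sketch of how an AI flag might reorder a reading worklist. It assumes a hypothetical `Study` record with an accession number, an arrival time, and a yes/no AI flag; real PACS/RIS integrations use their own data models and are not represented here.

```python
from dataclasses import dataclass


@dataclass
class Study:
    accession: str    # hypothetical study identifier
    arrival_min: int  # minutes since start of shift when the study arrived
    ai_flag: bool     # True if the (hypothetical) AI triage model flagged a critical finding


def prioritize(worklist: list[Study]) -> list[Study]:
    """Flagged studies first; within each group, first come, first served."""
    # `not s.ai_flag` is False for flagged studies, and False sorts before True.
    return sorted(worklist, key=lambda s: (not s.ai_flag, s.arrival_min))


worklist = [
    Study("A1", 0, False),
    Study("A2", 5, True),
    Study("A3", 10, False),
    Study("A4", 12, True),
]
print([s.accession for s in prioritize(worklist)])  # ['A2', 'A4', 'A1', 'A3']
```

The same ordering rule shows why specificity matters for triage: every false-positive flag moves a normal study ahead of unflagged studies that may still be urgent.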

Limitations and Cautions

Despite high sensitivity claims, many AI tools:

- Lack specificity, leading to false positives and overdiagnosis.

- Perform best under narrow, controlled conditions that do not reflect real-world clinical variability.

- Are trained on limited datasets, often lacking demographic and pathological diversity.

- May not generalize across different imaging equipment, protocols, or populations.

Example: An AI tool that detects intracranial hemorrhage might flag chronic calcifications or imaging artifacts as bleeds because of poor specificity.
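Poor specificity hurts more than its percentage suggests when the finding is rare, because the positive predictive value (PPV) depends on prevalence. The sketch below applies Bayes' theorem using illustrative, hypothetical figures (95% sensitivity, 90% specificity, 2% prevalence); these numbers are assumptions for the arithmetic, not the performance of any real product.

```python
def predictive_values(sensitivity: float, specificity: float, prevalence: float) -> tuple[float, float]:
    """Return (PPV, NPV) for a test applied at a given disease prevalence."""
    tp = sensitivity * prevalence              # true positives, per patient scanned
    fn = (1 - sensitivity) * prevalence        # missed cases
    fp = (1 - specificity) * (1 - prevalence)  # false alarms
    tn = specificity * (1 - prevalence)        # correct negatives
    return tp / (tp + fp), tn / (tn + fn)


# Hypothetical operating point, chosen only to illustrate the effect of low prevalence.
ppv, npv = predictive_values(sensitivity=0.95, specificity=0.90, prevalence=0.02)
print(f"PPV = {ppv:.3f}, NPV = {npv:.3f}")  # PPV = 0.162, NPV = 0.999
```

Under these assumptions, more than four out of five alerts would be false positives, even though both sensitivity and specificity look high on paper.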

Why AI Often Has High Sensitivity but Low Specificity

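AI models are often tuned to flag every potential positive so that true cases are not missed (false negatives). Because lowering the decision threshold raises sensitivity only at the cost of specificity, this tuning can result in over-triggering:

- Developers set operating thresholds conservatively, because a missed finding carries heavier legal and clinical consequences than a false alarm.

- Dataset imbalance, or overfitting to rare findings, can amplify false positives.

- Ground-truth labels used in training may come from radiologist consensus rather than a gold standard such as pathology or clinical follow-up.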
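The threshold trade-off can be sketched with a few lines of code. The scores and labels below are made-up toy values for a hypothetical hemorrhage detector; the point is only to show how moving the cut-off shifts sensitivity and specificity in opposite directions, and how AUC summarizes performance across all thresholds (computed here as the probability that a random positive case scores higher than a random negative one).

```python
def sens_spec(scores, labels, threshold):
    """Sensitivity and specificity when scores >= threshold are called positive."""
    tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
    fn = sum(1 for s, y in zip(scores, labels) if y == 1 and s < threshold)
    tn = sum(1 for s, y in zip(scores, labels) if y == 0 and s < threshold)
    fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


def auc(scores, labels):
    """ROC AUC: chance a random positive outranks a random negative (ties count half)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy, invented model outputs (probability of bleed) and true labels (1 = bleed).
scores = [0.95, 0.80, 0.65, 0.40, 0.70, 0.55, 0.30, 0.20, 0.10, 0.05]
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]

for t in (0.7, 0.5, 0.3):
    sens, spec = sens_spec(scores, labels, t)
    print(f"threshold {t:.1f}: sensitivity {sens:.2f}, specificity {spec:.2f}")
# threshold 0.7: sensitivity 0.50, specificity 0.83
# threshold 0.5: sensitivity 0.75, specificity 0.67
# threshold 0.3: sensitivity 1.00, specificity 0.50

print(f"AUC: {auc(scores, labels):.3f}")  # AUC: 0.875
```

A vendor chasing a headline of "100% sensitivity" would pick the lowest threshold here, which halves specificity. AUC does not change with the chosen threshold, so it alone does not tell you how the tool will behave at its deployed operating point.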

Key Metrics to Evaluate a Radiology AI Tool

| Metric | What It Tells You | Clinician Tip |
|---|---|---|
| Sensitivity | Proportion of true cases the tool detects | High is good, but check specificity too |
| Specificity | Proportion of normal cases the tool correctly calls negative | Crucial for avoiding unnecessary workups |
| AUC-ROC | Overall ability to separate positive from negative cases across all thresholds | Values closer to 1.0 are better; 0.5 is no better than chance |
| PPV/NPV | Probability that a positive (or negative) result is correct in practice | Depends on disease prevalence |
| F1 Score | Harmonic mean of precision (PPV) and recall (sensitivity) | Useful for imbalanced datasets; ignores true negatives |
| External Validation | Performance on independent datasets | Critical for real-world generalization |
| Bias & Fairness | Performance across age, gender, ethnicity | Check for equity in predictions |
| Regulatory Approval | FDA-cleared, CE-marked, or investigational? | Know what is cleared for use |
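Most of the metrics in the table can be derived from a single 2×2 confusion matrix. The sketch below uses invented counts from a hypothetical audit of 1,000 studies at 5% prevalence; the class name and numbers are illustrative assumptions, not data from any study or vendor.

```python
from dataclasses import dataclass


@dataclass
class ConfusionMatrix:
    tp: int  # AI positive, finding truly present
    fp: int  # AI positive, finding absent
    tn: int  # AI negative, finding absent
    fn: int  # AI negative, finding truly present

    def sensitivity(self) -> float:  # also called recall
        return self.tp / (self.tp + self.fn)

    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    def ppv(self) -> float:  # also called precision
        return self.tp / (self.tp + self.fp)

    def npv(self) -> float:
        return self.tn / (self.tn + self.fn)

    def f1(self) -> float:
        # Harmonic mean of precision and recall; true negatives play no part.
        p, r = self.ppv(), self.sensitivity()
        return 2 * p * r / (p + r)


# Hypothetical audit: 50 true cases and 950 normals; counts invented for illustration.
cm = ConfusionMatrix(tp=45, fp=95, tn=855, fn=5)
for name in ("sensitivity", "specificity", "ppv", "npv", "f1"):
    print(f"{name}: {getattr(cm, name)():.3f}")
# sensitivity: 0.900
# specificity: 0.900
# ppv: 0.321
# npv: 0.994
# f1: 0.474
```

With 90% sensitivity and 90% specificity, only about one flag in three is a true finding at this prevalence, which is why the table pairs PPV/NPV with a prevalence caveat.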

Current Research and Future Directions

Recent studies have highlighted both the promise and the pitfalls of AI in radiology:

- McKinney et al. (Nature, 2020) reported that an AI system outperformed radiologists in breast cancer detection, but only on certain test sets and comparisons.

- Oakden-Rayner (Radiology AI, 2020) critiqued unrealistic benchmarks and lack of transparency in many commercial models.

- Topol (JAMA, 2019) called for “augmented intelligence” — focusing on clinician-AI partnership, not replacement.

Future directions include:

- Multi-modal AI: combining imaging with clinical and genomic data.

- Continuous learning systems with real-time feedback loops.

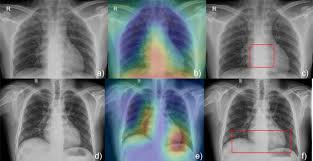

- Explainable AI: making models transparent and understandable.

- Federated learning: training across institutions without sharing patient data.
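One widely used way to train "across institutions without sharing patient data" is federated averaging (FedAvg): each site trains on its own images, and only model parameters are sent to a coordinator, which averages them weighted by each site's sample count. The sketch below shows only that aggregation step, with tiny hypothetical parameter vectors standing in for real network weights; local training rounds, secure transport, and other safeguards are deliberately omitted.

```python
def federated_average(site_weights: list[list[float]], site_sizes: list[int]) -> list[float]:
    """Weighted average of per-site parameter vectors (the FedAvg aggregation step).

    Each site contributes in proportion to its number of training examples.
    Only these parameters leave a site; the images themselves never do.
    """
    total = sum(site_sizes)
    n_params = len(site_weights[0])
    return [
        sum(w[i] * n for w, n in zip(site_weights, site_sizes)) / total
        for i in range(n_params)
    ]


# Three hypothetical hospitals, each with a (toy) locally trained parameter vector.
weights = [[0.2, 1.0], [0.4, 0.0], [0.6, 0.5]]
sizes = [100, 300, 600]
print(federated_average(weights, sizes))
```

Weighting by sample count keeps a small site from pulling the global model as hard as a large one; in practice this step is repeated over many rounds, with the averaged model sent back to each site for further local training.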

Final Thoughts: What Clinicians Should Ask Before Using AI in Radiology

- What clinical problem does it really solve?

- How was the algorithm trained and validated?

- How does it perform in your patient population?

- What does it cost, and what is the return (clinical or operational)?

- Who is legally responsible for its outputs?
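Asking "how does it perform in your patient population?" usually means running a local retrospective audit and breaking results down by subgroup (scanner vendor, age band, sex, or other relevant strata). The sketch below assumes hypothetical audit records of the form `(subgroup, ai_flagged, finding_confirmed)`; the field layout and the data are invented for illustration.

```python
from collections import defaultdict


def subgroup_performance(records):
    """Return {subgroup: (sensitivity, specificity)} from local audit records.

    records: iterable of (subgroup, ai_positive, truly_positive) tuples, where the
    truth comes from a reference standard established during the (hypothetical) audit.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, ai_pos, truth in records:
        key = ("tp" if truth else "fp") if ai_pos else ("fn" if truth else "tn")
        counts[group][key] += 1

    results = {}
    for group, c in counts.items():
        # NaN signals a subgroup with no positive (or no negative) cases to evaluate.
        sens = c["tp"] / (c["tp"] + c["fn"]) if c["tp"] + c["fn"] else float("nan")
        spec = c["tn"] / (c["tn"] + c["fp"]) if c["tn"] + c["fp"] else float("nan")
        results[group] = (sens, spec)
    return results


# Hypothetical audit records: (scanner vendor, AI flagged?, bleed confirmed?)
audit = [
    ("vendor_A", True, True), ("vendor_A", False, False),
    ("vendor_A", False, False), ("vendor_A", True, True),
    ("vendor_B", True, False), ("vendor_B", True, True),
    ("vendor_B", False, True), ("vendor_B", True, False),
]
for group, (sens, spec) in subgroup_performance(audit).items():
    print(f"{group}: sensitivity {sens:.2f}, specificity {spec:.2f}")
# vendor_A: sensitivity 1.00, specificity 1.00
# vendor_B: sensitivity 0.50, specificity 0.00
```

With only a handful of cases per subgroup the estimates are very noisy; a real audit needs enough cases in each stratum for differences like this to be meaningful.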

“AI should be a second reader, not the final voice.”