Artificial intelligence (AI) is increasingly marketed as a way to identify early warning signs of clinical deterioration, such as impending sepsis, cardiac arrest, or respiratory failure. While the promise of anticipating critical illness is compelling, clinicians should approach these tools with caution, especially when the marketing outpaces the science.

This page highlights what these AI systems claim to offer, why FDA “device approval” is often misunderstood, and what you need to know before integrating such tools into your clinical workflows.

What Early Warning AI Systems Claim to Do

Startups in this space are often built around the following features:

- Predictive Alerts
  - Sepsis onset prediction 6–12 hours before clinical signs
  - Cardiac arrest or rapid deterioration alerts
  - Risk scoring based on real-time EHR data

- EHR Integration & Monitoring
  - Continuous surveillance of vital signs, labs, and notes
  - Smart triaging in wards and ICUs
  - “Smart alarms” to reduce alert fatigue

- Workflow Support
  - Suggested interventions or checklists
  - Escalation pathways
  - Audit and documentation support for QI purposes

The Regulatory Reality: FDA-Cleared ≠ AI-Approved

Many companies advertise their product as FDA-approved, but in reality:

- The hardware (monitor, device) may be FDA-cleared.

- The AI model (predictive algorithm) is often not explicitly approved as a standalone medical decision tool.

- Some products are positioned as “clinical decision support” (CDS) tools, which face fewer regulatory requirements when they inform rather than directly drive medical decisions.

Example: A system may be FDA-cleared for “displaying early warning scores,” but not for actually diagnosing or predicting sepsis.

Red Flag: If the startup says “FDA-cleared AI for sepsis prediction,” ask: Which part is cleared? Under what class? Is it for prediction or just alert display?

Why These Models Can Misfire

Despite bold marketing, many systems:

- Use proprietary algorithms that lack transparency.

- Are trained on historical EHR data with noisy, biased, or inconsistent documentation.

- Trigger alerts too late or too often, leading to clinician desensitization (alert fatigue).

- Have no prospective, peer-reviewed evidence of improving outcomes.

Common Problems:

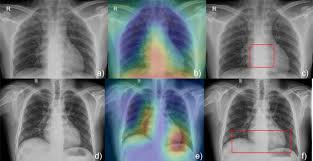

- Lack of interpretability (“black box” predictions).

- Triggering based on data artifacts (e.g., transient vital sign changes).

- Overreliance on static rules with minimal patient context.
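To illustrate how a transient vital-sign artifact can trigger an alert, the sketch below compares a single-reading threshold rule with one that requires several consecutive abnormal readings. The function names, the heart-rate threshold of 120, and the three-reading window are hypothetical values chosen for illustration, not clinical recommendations and not taken from any vendor's system.

```python
from typing import List


def naive_alerts(heart_rates: List[int], threshold: int = 120) -> List[int]:
    """Return the index of every single reading above the threshold."""
    return [i for i, hr in enumerate(heart_rates) if hr > threshold]


def sustained_alerts(
    heart_rates: List[int], threshold: int = 120, min_consecutive: int = 3
) -> List[int]:
    """Return the index at which the threshold has been exceeded for
    min_consecutive readings in a row (fires once per episode)."""
    alerts = []
    run = 0  # length of the current streak of abnormal readings
    for i, hr in enumerate(heart_rates):
        run = run + 1 if hr > threshold else 0
        if run == min_consecutive:
            alerts.append(i)
    return alerts


# Hypothetical heart-rate series: one isolated spike (likely artifact)
# followed by a sustained rise.
readings = [88, 90, 145, 89, 91, 125, 128, 131, 134, 92]

print(naive_alerts(readings))      # [2, 5, 6, 7, 8]
print(sustained_alerts(readings))  # [7]
```

The single-reading rule fires on the isolated spike and then repeatedly during the sustained rise. The persistence rule ignores the spike but alerts two readings later than it otherwise could, which is the trade-off between false alarms and timeliness that any such filter has to make.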

Key Metrics & Parameters for Evaluation

| Metric | Why It Matters | What to Watch |
|---|---|---|
| Positive Predictive Value (PPV) | Tells you how often an alert is actually correct | Often low in real-world settings |
| False Alarm Rate | Alerts that are incorrect or clinically irrelevant | High rate = alert fatigue |
| Time to Event | How early the alert fires before clinical recognition | Earlier isn’t always better if not actionable |
| Clinician Override Rate | Frequency of alerts being ignored or bypassed | High rate = trust issue |
| Outcome Impact | Does the system reduce mortality, ICU transfers, etc.? | Most tools lack outcome data |
| Auditability | Can the logic behind the alert be traced or reviewed? | Black box = medicolegal risk |
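Several of the metrics above can be estimated locally from a reviewed alert log. The following is a minimal sketch, assuming each alert has been chart-reviewed and labeled. The `AlertRecord` fields and the sample data are hypothetical and do not reflect any real EHR export format.

```python
from dataclasses import dataclass
from statistics import median
from typing import Dict, List, Optional


@dataclass
class AlertRecord:
    # All fields are assumed labels from a local chart review.
    true_event: bool                  # deterioration was confirmed
    overridden: bool                  # clinician dismissed or bypassed the alert
    lead_time_hours: Optional[float]  # alert-to-recognition gap; None if no event


def summarize(alerts: List[AlertRecord]) -> Dict[str, Optional[float]]:
    """Compute per-alert metrics from a reviewed alert log."""
    if not alerts:
        raise ValueError("alert log is empty")
    total = len(alerts)
    true_alerts = [a for a in alerts if a.true_event]
    lead_times = [a.lead_time_hours for a in true_alerts
                  if a.lead_time_hours is not None]
    ppv = len(true_alerts) / total
    return {
        "ppv": ppv,
        # Share of alerts that were false; this is not the same as the
        # false positive rate over all patients without the event.
        "false_alert_fraction": 1 - ppv,
        "override_rate": sum(a.overridden for a in alerts) / total,
        "median_lead_time_hours": median(lead_times) if lead_times else None,
    }


# Hypothetical log: 8 alerts, 2 confirmed events, 5 overrides.
log = [
    AlertRecord(True, False, 6.0),
    AlertRecord(True, False, 2.0),
    AlertRecord(False, True, None),
    AlertRecord(False, True, None),
    AlertRecord(False, True, None),
    AlertRecord(False, True, None),
    AlertRecord(False, True, None),
    AlertRecord(False, False, None),
]

print(summarize(log))
```

A log of alerts alone cannot show missed cases or outcome impact. Those require data on patients who deteriorated without an alert and a comparison against care without the tool.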

Current Research and Industry Trends

- Nemati et al. (Crit Care Med, 2020): Found AI sepsis alerts often lacked context and led to excessive unnecessary treatment.

- Sendak et al. (NPJ Digit Med, 2020): Urged transparency in AI models, noting widespread deployment without peer-reviewed evidence.

- FDA’s 2021 Discussion Paper: Highlights that adaptive/learning algorithms may need future regulation as they evolve post-deployment.

Emerging Areas:

- Explainable AI (XAI) for trust-building

- Context-aware alerts (e.g., integrating clinician judgment)

- Adaptive models that learn from local patient populations

Clinical Questions to Ask Before Adopting

- What clinical problem is the model designed to solve?

- Was it trained and validated on data similar to your patient population?

- What evidence (peer-reviewed, prospective) supports its effectiveness?

- What happens after an alert? Is it clinically actionable?

- Is the system explainable, auditable, and clinician-controllable?
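One reason the patient-population question matters is that PPV depends on how common the event is, even when sensitivity and specificity stay fixed. The sketch below applies Bayes' rule to show this. The sensitivity, specificity, and prevalence figures are hypothetical and chosen only to make the arithmetic visible.

```python
def ppv(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Positive predictive value via Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


# Same hypothetical model (80% sensitivity, 90% specificity) applied to
# two settings with different event prevalence.
high_prevalence_unit = ppv(0.80, 0.90, prevalence=0.20)
general_ward = ppv(0.80, 0.90, prevalence=0.02)

print(round(high_prevalence_unit, 2))  # 0.67
print(round(general_ward, 2))          # 0.14
```

Under these assumed numbers, roughly two in three alerts are correct where the event is common, but only about one in seven where it is rare. A PPV reported from a validation cohort may therefore not carry over to a ward with a different case mix.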

“An early warning system that clinicians can’t understand, trust, or act upon is not a tool — it’s a liability.”